The Nehalem CPU has 4 cores; with hyperthreading enabled, Linux sees 8 CPU "entities".

Interrupt affinity is set up to distribute the load among the CPU cores, which is standard in Bifrost for multi-queue capable cards. The utility used for this is eth-affinity.

It is also possible to reserve one or more CPUs from the routing burden so that they can serve other duties such as routing daemons, network monitoring, and ssh.

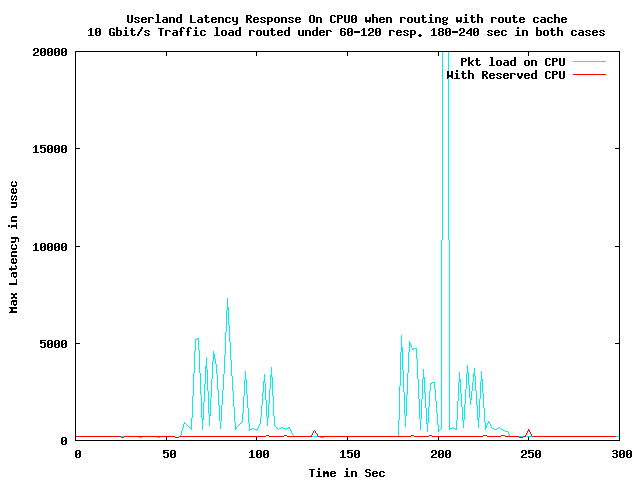

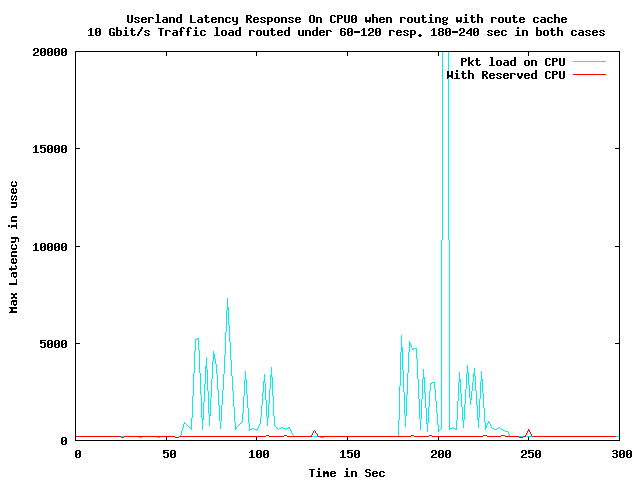

This experiment compares the maximal latency on CPU0 with and without packet load. When we reserve CPU0, CPU1-CPU7 consequently do the forwarding. Also remember that hyperthreading is enabled.

Pktgen was used to inject traffic according to [REF]. Traffic was injected only during seconds 60-120 and seconds 180-240 (see figure). During the other periods the machine was completely idle.

eth-affinity was used for IRQ affinity and CPU reservation, and the schedlat utility was used for measuring latency.
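The internals of schedlat are not described here, so the following is only a generic sketch of how scheduling latency can be estimated: request a short sleep and record how much later than requested the process actually wakes up. The function name `sleep_overshoot_us` and its default parameters are illustrative assumptions, not part of schedlat.

```python
import time


def sleep_overshoot_us(interval_s=0.001, samples=200):
    """Return, per sample, how late a timed sleep woke up (microseconds).

    Illustrative only: this is not how schedlat is implemented.
    """
    delays = []
    for _ in range(samples):
        start = time.monotonic()
        time.sleep(interval_s)
        elapsed = time.monotonic() - start
        # Clamp at zero so clock granularity cannot yield a negative delay.
        delays.append(max(0.0, (elapsed - interval_s) * 1e6))
    return delays


if __name__ == "__main__":
    delays = sleep_overshoot_us()
    mean = sum(delays) / len(delays)
    print(f"max latency: {max(delays):.0f} us, mean: {mean:.0f} us")
```

Running such a probe pinned to CPU0, once with and once without injected traffic, would give the kind of comparison made in this experiment.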

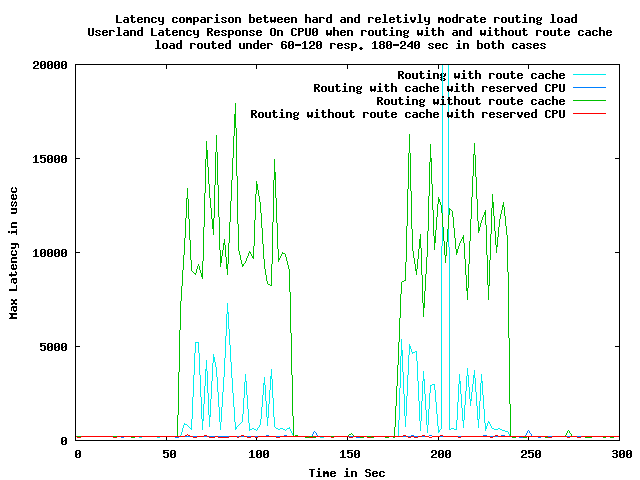

The next experiment compares latency when the network load is much heavier. For this we disable the routing cache. This means we take the "long path" and look up every packet in the FIB routing table. We use the same routing table with 279.00 entries.

We see that latency gets higher but is still not too bad. We also see that when we reserve CPU0 it is totally unaffected and has very low latency.

Simple CPU reservation is easily doable and can improve latency by separating bulk data handling from control and monitoring applications.

We should mention that this simple CPU reservation scheme is already in use in the latest version of Bifrost/Linux.

The CPU reservation is achieved by assigning the interface cards' interrupt vectors so that one or more CPUs do not receive network traffic. In Linux this is controlled by files under /proc/irq/IV, where IV is the interrupt vector for an interface RX/TX queue etc. The result can be viewed in /proc/interrupts. Bifrost/Linux includes a utility, "eth-affinity", to automatically set up affinity and also to reserve a particular CPU. For example, "eth-affinity -r 1" sets up affinity for the RX and TX interrupt vectors across all CPU cores but reserves CPU0.
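The mechanism can be illustrated with a minimal sketch that computes the hexadecimal CPU bitmasks accepted by /proc/irq/IV/smp_affinity and spreads the interrupt vectors round-robin over the non-reserved CPUs. This is not the eth-affinity implementation; the function names, the round-robin policy, and the IRQ numbers 64-71 are assumptions made for illustration.

```python
def cpu_mask(cpus):
    """Hex bitmask in the form accepted by /proc/irq/<IRQ>/smp_affinity."""
    mask = 0
    for cpu in cpus:
        mask |= 1 << cpu
    return format(mask, "x")


def plan_affinity(irqs, n_cpus, reserved=(0,)):
    """Map each IRQ to one non-reserved CPU, round-robin (assumed policy)."""
    usable = [c for c in range(n_cpus) if c not in reserved]
    if not usable:
        raise ValueError("no CPU left for forwarding")
    return {irq: cpu_mask([usable[i % len(usable)]])
            for i, irq in enumerate(irqs)}


def apply_affinity(plan, proc_root="/proc/irq"):
    """Write each mask to the IRQ's smp_affinity file (requires root)."""
    for irq, mask in plan.items():
        with open(f"{proc_root}/{irq}/smp_affinity", "w") as f:
            f.write(mask)


if __name__ == "__main__":
    # Hypothetical IRQ numbers for eight RX/TX queue vectors.
    plan = plan_affinity(irqs=range(64, 72), n_cpus=8, reserved=(0,))
    for irq, mask in plan.items():
        print(irq, mask)
```

With CPU0 reserved on an 8-CPU machine, no generated mask has bit 0 set, so none of the listed vectors is delivered to CPU0. `apply_affinity` is deliberately not called in the example, since it changes system state.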

Furthermore, the "taskset" utility can be used to run an application on the reserved CPU. With "taskset -c 0 /usr/sbin/sshd", sshd will run on our "free" CPU0. Of course, this could also be a good idea for applications like routing daemons. eth-affinity is written by Jens Laas. CPU reservation requires multiqueue capable interface cards.
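The same pinning can also be done from inside a program. The sketch below uses Python's `os.sched_setaffinity` (Linux only) to restrict the current process to one CPU; since the affinity mask is inherited across exec, a command started afterwards runs on that CPU too. The helper name `pin_to_cpu` and the command-line handling are illustrative assumptions.

```python
import os
import sys


def pin_to_cpu(cpu, pid=0):
    """Restrict process `pid` (0 = the caller) to a single CPU.

    Returns the resulting affinity set so the caller can verify it.
    """
    os.sched_setaffinity(pid, {cpu})
    return os.sched_getaffinity(pid)


if __name__ == "__main__":
    # Usage sketch: python pin.py 0 /usr/sbin/sshd
    if len(sys.argv) > 2:
        pin_to_cpu(int(sys.argv[1]))
        # The affinity mask survives exec, so the command stays pinned.
        os.execvp(sys.argv[2], sys.argv[2:])
```

Pinning fails with an error if the requested CPU is not available to the process, for example when a cpuset excludes it.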